Key Takeaways

- "Clicking next" verifies attendance, not learning—completion metrics create a false sense of security

- Easy quizzes with unlimited retakes verify persistence, not knowledge

- Real verification tests application through scenarios and plausible wrong answers

- Spaced assessments over time reveal whether knowledge is retained or fading

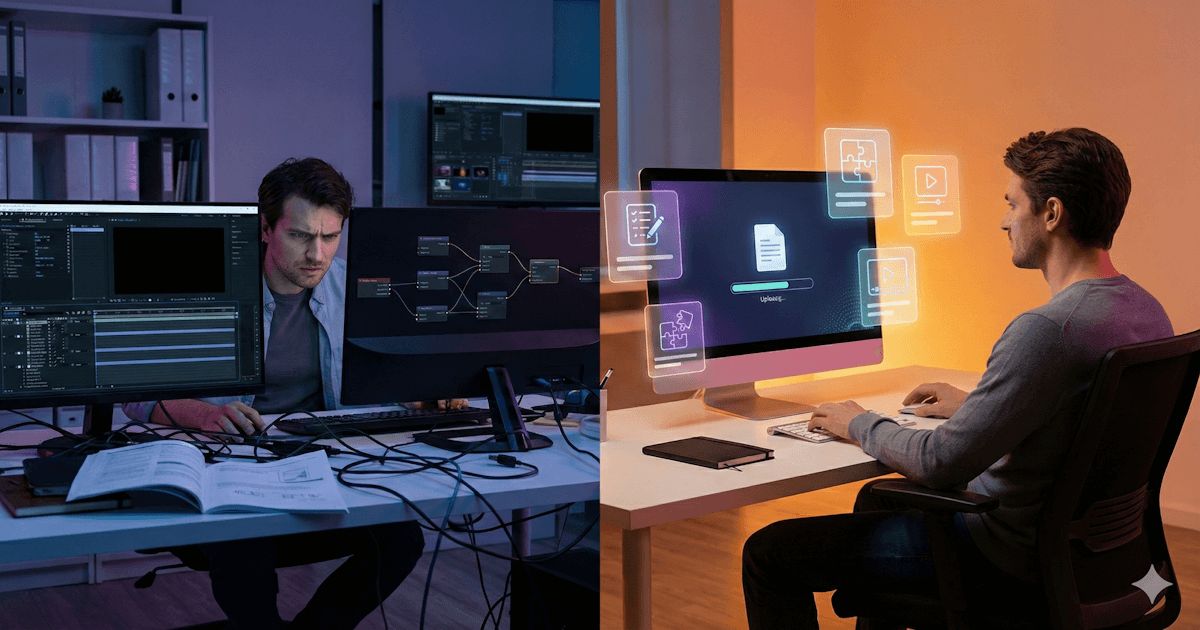

We've all done it. Click. Click. Click. Scroll to the bottom. Click next. Wait for the progress bar. Click next again. Pick the quiz answers that are obviously correct. Submit. Certificate downloaded. Training complete.

Did you learn anything? Maybe. Probably not. But you're marked as trained, and that's what mattered—at least to the system.

This is how most corporate training works. Completion is the metric. Did they finish? Did they pass the quiz? Check the box, move on.

But completion isn't learning. And the quiz at the end—with its obvious answers and unlimited retakes—isn't verification. It's theater. If you actually care whether people learned something, you need a different approach.

The Problem with Completion-Based Metrics

When completion is the goal, that's what people optimize for. They click through as fast as possible. They skip what they can skip. They guess on the quiz until they pass.

The training system shows 100% completion. Leadership sees green dashboards. Everyone feels good.

Meanwhile, the actual knowledge hasn't been transferred. The behaviors haven't changed. When the situation arises where someone should apply what they "learned," they don't—because they never actually learned it.

This isn't employees being lazy or malicious. It's a rational response to incentives. If the only thing that matters is completing the training, that's what they'll focus on. The system is built to produce completions. It just doesn't produce learning.

The Problem with Easy Quizzes

The end-of-training quiz is supposed to verify understanding. In practice, it rarely does.

Most quiz questions are too easy. The wrong answers are obviously wrong. The correct answer is either common sense or phrased to match the training slides word for word. That tests pattern matching, not understanding.

Most quizzes allow unlimited retakes. Get it wrong? Try again. Keep trying until you pass. What this actually verifies is persistence, not knowledge.

Most quizzes happen immediately after training. Short-term recall is easy. The real question is whether someone will remember it next week, next month, when they actually need it. The immediate post-training quiz doesn't test that.

A 100% pass rate on your training quizzes doesn't mean everyone learned. It means the quiz is too easy or allows too many attempts.

What Real Verification Looks Like

Verification should answer the question: Can this person actually apply what they were supposed to learn? This is the essence of measuring training effectiveness beyond completion rates.

That requires a few shifts.

Test application, not recall. Instead of "what is the policy on X?" ask "here's a situation—what should you do?" Scenario-based questions reveal whether someone can use knowledge, not just recognize it.

Use plausible wrong answers. If the wrong answers are obviously wrong, the question tests nothing. Good distractors represent real misconceptions, common mistakes, or things that sound right but aren't.

Limit retakes—or make them costly. If someone fails a quiz, that's information. Either they didn't learn, or the training didn't work. Unlimited retakes hide this signal. Limiting retakes (or requiring re-training before retaking) makes the assessment meaningful.

Separate the quiz from the training. Immediate quizzes test short-term memory. A quiz taken a day later—or a week later—tests actual retention. Spaced assessments over weeks or months show whether knowledge is retained or fading.
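If your platform lets you schedule assessments, spacing them out can be as simple as computing due dates from the training date. The Python sketch below assumes follow-ups at one day, one week, one month, and three months after training. Those intervals, and the names `FOLLOW_UP_OFFSETS_DAYS` and `assessment_schedule`, are hypothetical, chosen for illustration rather than taken from any research or product.

```python
from datetime import date, timedelta

# Hypothetical intervals (in days) for follow-up assessments after training.
FOLLOW_UP_OFFSETS_DAYS = (1, 7, 30, 90)


def assessment_schedule(training_date: date,
                        offsets_days: tuple[int, ...] = FOLLOW_UP_OFFSETS_DAYS) -> list[date]:
    """Return the dates on which each follow-up assessment falls due."""
    return [training_date + timedelta(days=offset) for offset in offsets_days]


# Example: training completed on 4 March 2024.
for due in assessment_schedule(date(2024, 3, 4)):
    print(due.isoformat())
```

Comparing scores across those dates, rather than looking only at the day-one result, is what shows whether knowledge is holding or fading.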

Beyond Quizzes: Other Verification Approaches

Quizzes are simple to implement, but they're not the only way to verify learning.

Observation and feedback: Can a manager observe whether someone applies the training? A sales manager who sits in on calls can see whether the product training translates to real conversations. This is high-effort but high-validity.

Practical demonstrations: Before someone is certified on a skill, they demonstrate it. Show me how you'd handle this customer complaint. Walk me through how you'd use this system. AI roleplay scenarios can now simulate these practical tests at scale.

Work output review: Did their reports improve after the training? Did error rates decrease? Connecting training to outcomes is harder but more meaningful.

Self-assessment with validation: Ask people to rate their own confidence in applying what they learned. Then compare to actual performance. The gap between self-perception and reality is useful information.
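One way to quantify that gap, sketched in Python under stated assumptions: each learner rates their confidence from 1 to 5, and a later performance check yields a score from 0 to 100. The `Learner` type, the `calibration_gap` and `flag_overconfident` functions, the scales, the 20-point threshold, and the sample team are all hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Learner:
    name: str
    confidence: int      # hypothetical self-rating: 1 (not confident) to 5 (very confident)
    performance: float   # hypothetical observed score: 0 to 100


def calibration_gap(learner: Learner) -> float:
    """Self-rated confidence minus observed performance, both on a 0-100 scale.

    Positive values mean more confidence than performance supports;
    negative values mean the learner underrates themselves.
    """
    confidence_pct = (learner.confidence - 1) / 4 * 100  # map 1..5 onto 0..100
    return confidence_pct - learner.performance


def flag_overconfident(learners: list[Learner], threshold: float = 20.0) -> list[str]:
    """Names of learners whose confidence exceeds performance by more than `threshold` points."""
    return [learner.name for learner in learners if calibration_gap(learner) > threshold]


team = [
    Learner("Ana", confidence=5, performance=55.0),
    Learner("Ben", confidence=3, performance=60.0),
    Learner("Chen", confidence=2, performance=85.0),
]
for person in team:
    print(f"{person.name}: gap {calibration_gap(person):+.0f} points")
print("Follow up with:", flag_overconfident(team))
```

Here Ana feels fully confident but scores 55, a gap of +45 points, so she is the one flagged. Overconfidence is the riskier direction, since those are the people least likely to ask for help when the situation arises.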

The Organizational Challenge

Better verification sounds good in principle. In practice, it runs into resistance.

It takes more effort. Writing good questions is harder than writing easy ones. Limiting retakes means dealing with failures.

It reveals uncomfortable truths. When verification is meaningful, some people won't pass. Some training will be revealed as ineffective. The comfortable fiction of 100% completion rates disappears.

It requires different metrics. If you report on completion rates and they've always been 98%, switching to meaningful verification might show 70% competency. That looks like regression, even though it's just honesty.

Overcoming this requires deciding that actual learning matters more than the appearance of learning. That's an organizational decision, not just an L&D tactic.

A Practical Path Forward

You probably can't overhaul everything at once.

Start with high-stakes training. Compliance, safety, anything where getting it wrong has consequences. This is where the gap between completion and competence matters most.

Improve questions before changing systems. You can make existing quizzes more meaningful without new technology. Better questions, better distractors, fewer retakes.

Pilot spaced assessment. Pick one training program and add a follow-up assessment two weeks later. Compare the results to the immediate post-training quiz. The drop between the two scores shows how much of the training people actually retain.
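The comparison itself is simple arithmetic. A minimal Python sketch, assuming you have each participant's immediate quiz score and two-week follow-up score on the same 0-100 scale, with the follow-up covering the same content through comparable questions. The function name `retention_rate` and the sample scores are hypothetical, made up for illustration and not results from any real program.

```python
from statistics import mean


def retention_rate(immediate: list[float], follow_up: list[float]) -> float:
    """Fraction of the immediate average score that survives to the follow-up.

    Assumes both lists hold scores for the same participants, in the same
    order, on the same 0-100 scale.
    """
    if len(immediate) != len(follow_up) or not immediate:
        raise ValueError("need matching, non-empty score lists")
    baseline = mean(immediate)
    if baseline == 0:
        raise ValueError("immediate average is zero; retention is undefined")
    return mean(follow_up) / baseline


# Hypothetical pilot data for five participants.
immediate_scores = [95, 90, 100, 85, 90]
two_week_scores = [70, 60, 80, 55, 65]

rate = retention_rate(immediate_scores, two_week_scores)
print(f"Immediate average: {mean(immediate_scores):.0f}")
print(f"Two-week average:  {mean(two_week_scores):.0f}")
print(f"Retained: {rate:.0%}")
```

With these made-up numbers, the average falls from 92 to 66, so roughly 72% of what showed up on the day-one quiz is still there two weeks later.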

The point of training is learning. The point of verification is confirming that learning happened. "Click next to continue" confirms neither.

Real verification requires harder questions, less forgiving assessments, and the willingness to see uncomfortable results. It's more work. It's also the difference between training that changes behavior and training that just checks boxes.

JoySuite is built around verification that matters. Assessment that tests application, not just recall. Spaced reinforcement that confirms retention. Results that show you who actually learned—not just who clicked through.