Key Takeaways

- RAG (Retrieval-Augmented Generation) combines finding relevant information with generating answers—it's how AI assistants answer questions from your specific documents.

- Unlike ChatGPT on its own, RAG-powered systems are grounded in your content, reducing hallucination and enabling accurate answers about your organization.

- The key components are embeddings (understanding meaning), vector databases (finding similar content), and LLMs (generating answers from context).

- RAG quality depends on retrieval quality—the AI can only answer well if it finds the right content first.

When you ask ChatGPT a question, it answers from what it learned during training: a snapshot of mostly public text that ends at a cutoff date. It doesn't know your company's policies, your product documentation, or last week's announcement.

This is fine for general knowledge. It's useless for organizational knowledge.

RAG—Retrieval-Augmented Generation—solves this. It's the technology that powers AI knowledge assistants, enabling AI to answer questions from your specific content rather than just general knowledge.

This article explains how RAG works. You don't need to be technical to follow along—we'll cover the concepts in practical terms, then dive deeper for those who want more detail.

The Problem RAG Solves

Large language models (LLMs) like GPT-4 or Claude are trained on massive amounts of text. They're remarkably good at understanding and generating human language. But they have a fundamental limitation: they only know what they were trained on.

Your vacation policy? Not in the training data. Your product specifications? Not there. Your internal procedures? Definitely not.

You have two options:

Option 1: Fine-tuning. Train the model on your content so it "learns" your information. This is expensive, slow, and problematic for content that changes. Every time you update a policy, you'd need to retrain the model.

Option 2: Retrieval-Augmented Generation. Instead of training the model on your content, you give it your content at query time. When someone asks a question, you find the relevant documents and hand them to the AI along with the question. The AI generates an answer based on what you just gave it.

RAG is Option 2. It's faster, cheaper, and works with content that changes.

Analogy: Fine-tuning is like memorizing a textbook. RAG is like taking an open-book exam. The model doesn't need to "know" your content—it just needs to be able to read it when asked a question.

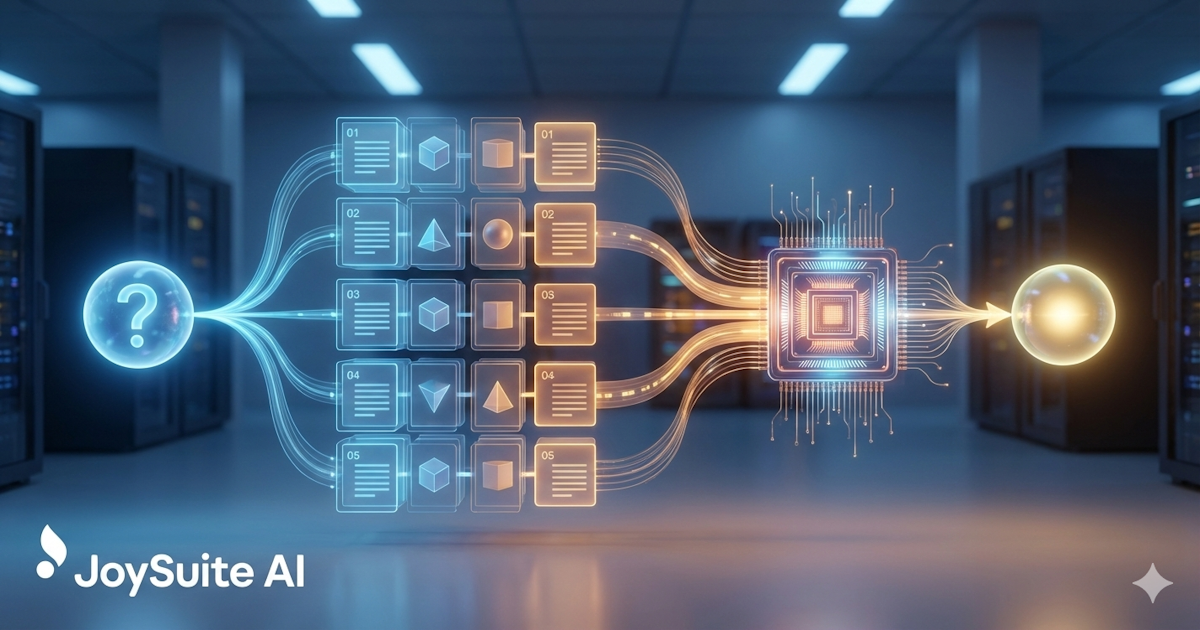

How RAG Works: The Simple Version

Here's the RAG process in plain terms:

- You store your documents. Your policies, procedures, documentation—whatever you want the AI to answer questions about—get processed and stored in a special database.

- Someone asks a question. "What's our parental leave policy?"

- The system finds relevant content. It searches your stored documents for sections most likely to contain the answer.

- The AI reads and answers. The relevant content is given to the AI along with the question. The AI reads it and generates an answer.

- You get an answer with sources. The response includes where the information came from, so you can verify.

The key insight: the AI isn't trying to remember your policies from training. It's reading them right now, in response to your question.
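The five steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production design: `retrieve` ranks documents by shared words instead of embeddings, `fake_llm` is a hypothetical stand-in for a real model call, and the document names and policy text are made up.

```python
import re

# Step 1: store your documents (here, a plain dict; names and text are made up).
DOCUMENTS = {
    "parental-leave.md": "Parental leave policy: employees receive 12 weeks of paid parental leave.",
    "expenses.md": "Expense policy: submit receipts within 30 days of purchase.",
}


def words(text):
    """Lowercase a string and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Step 3: rank documents by how many words they share with the question.

    A real system would compare embeddings instead of counting shared words.
    """
    q = words(question)
    ranked = sorted(documents.items(), key=lambda item: len(q & words(item[1])), reverse=True)
    return ranked[:top_k]


def fake_llm(prompt):
    """Hypothetical stand-in for a model call; it only reports the prompt size."""
    return f"(model answer generated from a {len(prompt)}-character prompt)"


def answer(question, documents, generate=fake_llm):
    """Steps 2-5: take a question, retrieve content, generate, and return sources."""
    hits = retrieve(question, documents)
    context = "\n".join(text for _, text in hits)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    return {"answer": generate(prompt), "sources": [name for name, _ in hits]}


result = answer("What's our parental leave policy?", DOCUMENTS)
print(result["sources"])
```

Swapping `fake_llm` for a real model client and `retrieve` for a vector search would leave the overall shape of `answer` unchanged, which is the point of the sketch.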

The Technical Components

For those who want to understand what's happening under the hood, RAG involves several technical components working together.

Embeddings: Understanding Meaning

Computers don't naturally understand language. The words "vacation" and "PTO" mean similar things to humans, but to a computer, they're just different strings of characters.

Embeddings solve this by converting text into lists of numbers (vectors) that capture meaning: texts with similar meanings produce vectors that sit close together.

"What's our PTO policy?" and "How much vacation do I get?" would have similar embeddings, even though they share few words. This is how the system understands that they're asking the same thing.

Example: "King" and "Queen" would have similar embeddings—they're both royalty. "King" and "Keyboard" would have very different embeddings despite both starting with 'K'.
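To make "similar embeddings" concrete, here is a minimal sketch that compares vectors with cosine similarity, one common way to measure how close two embeddings are. The three-dimensional vectors are invented for illustration; a real embedding model would produce them, usually with hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-made vectors, invented for illustration only.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "keyboard": [0.1, 0.05, 0.95],
}

royal = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
unrelated = cosine_similarity(toy_embeddings["king"], toy_embeddings["keyboard"])
print(f"king vs queen:    {royal:.2f}")
print(f"king vs keyboard: {unrelated:.2f}")
```

A score near 1 means the vectors point in nearly the same direction; a score near 0 means they have little in common.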

Vector Databases: Finding Similar Content

Once you've converted your documents into embeddings, you need to store them and search them efficiently. That's what vector databases do.

When someone asks a question, you:

- Convert the question to an embedding

- Search the vector database for document embeddings that are similar

- Return the most similar document chunks

This is "semantic search"—finding content by meaning rather than just keyword matching. It's why "How do I request time off?" can find a document titled "PTO Request Procedure."
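The three search steps above can be sketched with a toy in-memory index. `InMemoryVectorIndex` is a hypothetical class written for this example, and the vectors are invented rather than produced by an embedding model. It compares the query against every stored vector, whereas production vector databases typically rely on approximate nearest-neighbor indexes to stay fast at scale.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


class InMemoryVectorIndex:
    """A toy stand-in for a vector database: stores vectors, searches by brute force."""

    def __init__(self):
        self.entries = []  # list of (chunk_id, vector) pairs

    def add(self, chunk_id, vector):
        self.entries.append((chunk_id, vector))

    def search(self, query_vector, top_k=2):
        """Return the top_k (chunk_id, score) pairs, most similar first."""
        scored = [(cid, cosine_similarity(query_vector, vec)) for cid, vec in self.entries]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = InMemoryVectorIndex()
# Invented vectors; in practice an embedding model would produce these.
index.add("PTO Request Procedure", [0.9, 0.1, 0.0])
index.add("Expense Reimbursement", [0.1, 0.9, 0.1])
index.add("Office Security Badges", [0.0, 0.2, 0.9])

# Pretend this is the embedding of "How do I request time off?"
query_vector = [0.8, 0.2, 0.1]
results = index.search(query_vector, top_k=2)
print(results[0][0])
```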

Chunking: Breaking Down Documents

LLMs can only process so much text at once (their "context window"). A 100-page policy manual may not fit, and even when it does, most of it is irrelevant to any single question. So documents are broken into smaller chunks, perhaps 500 to 1,000 words each.

When you search, you're finding the most relevant chunks, not whole documents. This is actually an advantage: you give the AI exactly the relevant section rather than making it process pages of irrelevant content.

How you chunk documents matters. Too small, and chunks lack context. Too large, and you dilute relevant information with irrelevant text. The art of chunking is finding the right granularity for your content.
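As a rough illustration of the trade-off, here is a minimal word-based chunker. The overlap between consecutive chunks is a common practice assumed for this sketch, not something the approach requires, and the parameter values are placeholders to tune for your content. Real systems often split on headings, paragraphs, or sentences instead of raw word counts.

```python
def chunk_words(text, chunk_size=500, overlap=50):
    """Split text into chunks of up to chunk_size words.

    Consecutive chunks share `overlap` words, so text near a boundary
    appears in both neighboring chunks.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reached the end of the text
    return chunks


# A made-up 1,200-word "document" of numbered placeholder words.
document = " ".join(f"w{i}" for i in range(1200))
chunks = chunk_words(document, chunk_size=500, overlap=50)
print(len(chunks), [len(c.split()) for c in chunks])
```

Raising `chunk_size` gives each chunk more surrounding context at the cost of more irrelevant text per chunk; lowering it does the reverse.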

Large Language Models: Generating Answers

Once you've retrieved relevant content, an LLM generates the answer. The LLM receives:

- The user's question

- The retrieved content (the "context")

- Instructions on how to answer (the "system prompt")

A typical prompt might say: "Answer the user's question based only on the provided context. If the context doesn't contain enough information to answer, say so. Cite your sources."

The LLM then generates a response based on this combination—a natural-language answer drawn from your specific content.
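The three inputs listed above can be assembled as follows. This is a sketch, assuming the role/content message shape that many chat-style model APIs accept; it is not tied to any particular vendor, and `build_messages` is a hypothetical helper name.

```python
SYSTEM_PROMPT = (
    "Answer the user's question based only on the provided context. "
    "If the context doesn't contain enough information to answer, say so. "
    "Cite your sources."
)


def build_messages(question, chunks):
    """Combine instructions, retrieved chunks, and the question into chat messages.

    `chunks` is a list of (source_name, text) pairs. Numbering each chunk gives
    the model something concrete to cite, such as [1] or [2].
    """
    context = "\n\n".join(
        f"[{i}] Source: {source}\n{text}" for i, (source, text) in enumerate(chunks, start=1)
    )
    user_content = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


messages = build_messages(
    "How do I request time off?",
    [("PTO Request Procedure", "Submit time-off requests through the HR portal.")],
)
print(messages[1]["content"])
```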

Why RAG Beats Other Approaches

vs. Vanilla LLMs

Using an LLM without RAG means relying on the model's training data. For organizational knowledge, this invites hallucination: asked about something it never saw, the model may make up plausible-sounding but incorrect answers.

RAG grounds the model in your actual content. Hallucination risk is reduced (though not eliminated) because the model has real source material to work with.

vs. Fine-tuning

Fine-tuning trains the model on your content. This can work but has significant drawbacks:

- Expensive and time-consuming

- Needs to be redone when content changes

- Doesn't scale well to large document collections

- Can degrade performance on other tasks

RAG is more practical for most knowledge management use cases. When content changes, the system picks up the update as soon as the changed documents are re-indexed, with no retraining.
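One way to keep an index current without retraining anything is to re-process only the documents whose text has changed. The sketch below detects those documents by comparing content hashes. The function names and sample documents are hypothetical, and the chunking and embedding steps that would follow are left out.

```python
import hashlib


def fingerprint(text):
    """Return a stable hash of a document's text."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def find_stale_documents(documents, indexed_fingerprints):
    """Return names of documents that are new or changed since the last indexing run."""
    return [
        name for name, text in documents.items()
        if indexed_fingerprints.get(name) != fingerprint(text)
    ]


# Made-up state from a previous indexing run.
old_docs = {"pto.md": "Employees accrue 15 days of PTO.", "badges.md": "Wear your badge."}
indexed = {name: fingerprint(text) for name, text in old_docs.items()}

# The PTO policy is edited; only that document needs re-chunking and re-embedding.
new_docs = dict(old_docs, **{"pto.md": "Employees accrue 20 days of PTO."})
print(find_stale_documents(new_docs, indexed))
```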

vs. Traditional Search

Traditional search returns documents. RAG returns answers. The user experience is dramatically better—instead of scanning through search results and reading documents, users get direct answers to their questions.

What Makes RAG Good or Bad

Not all RAG implementations are equal. Quality depends on several factors:

Retrieval Quality

The AI can only answer well if it finds the right content. Poor retrieval—missing relevant documents or including irrelevant ones—leads to poor answers.

Retrieval quality depends on:

- Embedding model quality

- Chunking strategy

- Search algorithm sophistication

- Content coverage and organization

The best LLM in the world can't answer from content it wasn't given. Retrieval is the foundation—if it's weak, everything built on it suffers.
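Retrieval quality can be measured before any LLM is involved. A minimal sketch, assuming you have a small set of questions for which a person has marked the relevant chunks: precision@k asks how many of the top k results were relevant, and recall@k asks how many of the relevant chunks made it into the top k. The chunk IDs below are hypothetical.

```python
def precision_and_recall_at_k(retrieved_ids, relevant_ids, k):
    """Score one query: retrieved_ids is the ranked result list, relevant_ids the ground truth."""
    top_k = retrieved_ids[:k]
    hits = sum(1 for chunk_id in top_k if chunk_id in relevant_ids)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


# Hypothetical labeled example: a person marked chunks "c2" and "c7" as relevant.
retrieved = ["c2", "c5", "c9", "c7", "c1"]
relevant = {"c2", "c7"}

precision, recall = precision_and_recall_at_k(retrieved, relevant, k=3)
print(f"precision@3={precision:.2f} recall@3={recall:.2f}")
```

Averaging these scores over many questions gives a baseline for comparing embedding models or chunking strategies.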

Content Quality

RAG can only answer from what's in your knowledge base. If your policies are outdated, the AI will provide outdated information—confidently. If you have contradictory documents, the AI may cite the wrong one.

This is the often-overlooked requirement: RAG makes your content quality more important, not less. The AI amplifies whatever you have—good or bad. Practical applications like AI for HR show this quality dependency clearly.

Prompt Engineering

How you instruct the LLM matters. Good prompts help the model:

- Stay grounded in provided context

- Acknowledge when it doesn't have enough information

- Format answers clearly

- Cite sources properly

Poor prompts lead to hallucination, unhelpful answers, or failure to use retrieved context effectively.

Chunking Strategy

How documents are broken into chunks affects what gets retrieved and how useful it is. The same document can perform very differently depending on how it's chunked.

RAG in Practice

Here's what a RAG-powered interaction actually looks like:

User asks: "What's the process for requesting a leave of absence?"

System finds: Three relevant chunks from your HR policy documents and employee handbook.

System sends to LLM: The question plus those three chunks plus instructions to answer based on the content.

LLM generates: "To request a leave of absence, submit a request through the HR portal at least 30 days in advance. You'll need to specify the dates, reason for leave, and whether you're requesting paid or unpaid leave. Your manager and HR will review and respond within 5 business days. For medical leaves, additional documentation from a healthcare provider may be required."

User receives: The answer plus citations showing which documents the information came from.

The entire process takes seconds. The user didn't need to search through multiple documents or know what terminology to use.

Limitations of RAG

RAG isn't perfect. Understanding its limitations helps set realistic expectations.

Hallucination Risk Remains

RAG reduces hallucination but doesn't eliminate it. The LLM can still misinterpret content, make incorrect inferences, or occasionally generate information not in the context.

This is why citations matter—users can verify claims against source documents.

Retrieval Misses

Sometimes the right content exists but isn't retrieved. The question might use different terminology, or the relevant information might be spread across documents in ways that make retrieval difficult.

Context Window Limits

LLMs can only process so much text. Complex questions that require synthesizing many documents may hit limits on how much context can be provided.
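A simple way to respect the limit is to add chunks in relevance order until a budget is used up. The sketch below approximates token counts with word counts, which is an assumption made to keep the example self-contained; a real system would count with the tokenizer that matches its model. The sample chunks are made up.

```python
def pack_context(ranked_chunks, max_tokens):
    """Keep the highest-ranked chunks that fit within max_tokens.

    Token counts are approximated by word counts here (an assumption).
    """
    selected, used = [], 0
    for chunk in ranked_chunks:  # assumed to be sorted most relevant first
        cost = len(chunk.split())
        if used + cost > max_tokens:
            continue  # skip chunks that would overflow; a smaller one may still fit
        selected.append(chunk)
        used += cost
    return selected, used


chunks = [
    "Leave requests go through the HR portal.",
    "Medical leave requires documentation from a healthcare provider.",
    "Managers respond within 5 business days.",
]
selected, used = pack_context(chunks, max_tokens=14)
print(len(selected), used)
```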

Content Gaps

RAG can't answer questions about things that aren't documented. If the information isn't in your knowledge base, the AI can't find it.

Important: When the AI can't answer, you want it to say so clearly rather than making something up. Good RAG implementations are honest about limitations—but this requires careful prompt engineering.
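Prompt instructions can be backed by a guard in code. As a sketch, the function below skips the model call entirely when no retrieved chunk is similar enough to the question. The `min_score` cutoff is a hypothetical value, since a sensible threshold depends on the embedding model and content, and `fake_generate` stands in for a real LLM call.

```python
NO_ANSWER = "I couldn't find this in the knowledge base."


def answer_or_abstain(search_results, generate, min_score=0.75):
    """Call the model only when at least one retrieved chunk is similar enough.

    search_results: list of (chunk_text, similarity_score) pairs.
    generate: any callable that turns a list of chunk texts into an answer.
    min_score: a hypothetical cutoff; tune it for your embedding model.
    """
    strong = [text for text, score in search_results if score >= min_score]
    if not strong:
        return NO_ANSWER
    return generate(strong)


def fake_generate(chunks):
    """Stand-in for an LLM call."""
    return f"Answer based on {len(chunks)} chunk(s)."


print(answer_or_abstain([("PTO accrues monthly.", 0.91)], fake_generate))
print(answer_or_abstain([("Badge policy.", 0.32)], fake_generate))
```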

The Future of RAG

The technology continues evolving rapidly:

Better retrieval methods. Hybrid approaches combining semantic and keyword search, re-ranking models, and multi-query techniques are improving retrieval quality.

Larger context windows. Models are handling more context, enabling answers from more sources simultaneously.

Multimodal capabilities. RAG is expanding beyond text to include images, diagrams, and other content types.

Agentic behavior. RAG systems are starting to take actions—not just answering questions but performing tasks based on retrieved knowledge.
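To illustrate the hybrid approach mentioned under better retrieval methods, here is a minimal sketch of reciprocal rank fusion, one widely used way to merge a semantic result list with a keyword result list. Each item earns 1 / (k + rank) from every list it appears in; `k=60` is a conventional default rather than a requirement. The document IDs are made up.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each item scores 1 / (k + rank) per list, summed across lists."""
    scores = {}
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] = scores.get(item, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Made-up result lists for one query.
semantic_hits = ["pto-procedure", "leave-of-absence", "holiday-calendar"]
keyword_hits = ["leave-of-absence", "expense-policy", "pto-procedure"]

fused = reciprocal_rank_fusion([semantic_hits, keyword_hits])
print(fused)
```

Items that rank well in both lists rise to the top, which is why the method works without needing the two searches to produce comparable scores.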

Conclusion

RAG is the technology that makes AI knowledge management practical. By combining retrieval with generation, it enables AI to answer questions from your specific content—not just general knowledge.

The key insight: RAG doesn't make AI smarter about your organization. It gives AI access to your organization's knowledge at the moment it needs to answer. The AI reads your content and generates an answer from what it reads.

This simple architecture has profound implications. Knowledge that was trapped in documents becomes accessible through natural conversation. Questions that required searching, reading, and synthesizing can be answered instantly.

Understanding RAG helps you evaluate AI knowledge tools, set realistic expectations, and invest appropriately in content quality—the foundation that RAG builds on.

JoySuite uses sophisticated RAG architecture to deliver instant answers from your organizational knowledge. Connect your content sources, and let AI do the work of finding and synthesizing information—so your team can focus on the work that matters.