Key Takeaways

- Completion rates are comfortable vanity metrics that prove attendance, not capability

- To truly measure effectiveness, verify application and retention—not just immediate post-training recall

- Use scenario-based assessments, delayed testing, and observational metrics that track actual behavior change

- Measurement without action is just data collection—use it to create feedback loops that improve training

"95% of employees completed the training."

This is the number that shows up in most training reports. It looks good in a dashboard. It answers the question "Did people take the training?" It provides a sense of accomplishment.

It also tells you almost nothing about whether the training worked.

Completion measures attendance, not learning. Someone can complete a training while checking email in another window, clicking through as fast as the system allows, and retaining nothing. The completion rate stays high. The actual impact remains unknown.

We measure completion because it's easy to measure. The LMS tracks it automatically. It's unambiguous—either they finished, or they didn't. It's defensible when someone asks what the training investment achieved.

But if you actually want to know whether training is working, you need to measure different things.

Measure What Changed

The question worth asking is deceptively simple: what changed because of this training?

Not what people sat through. Not what they were exposed to. What actually changed—in their knowledge, their skills, their behavior, their results.

This is harder to measure than completion. It requires thinking about what success looks like before the training is built, not after. It requires assessments that test real understanding. It sometimes requires looking at data that lives outside the LMS entirely. But it's the only way to know if training is worth the time and money being spent on it.

The Problem With Easy Quizzes

The most immediate thing you can measure is whether people actually learned what they were supposed to learn. This sounds obvious, but most training assessments don't really test it.

The quiz at the end has questions that are too easy, with wrong answers that are obviously wrong. Everyone passes. The pass rate looks great. And you have no idea whether anyone can actually apply what was taught.

Real learning measurement requires assessments that make people think. Scenario-based questions where they have to apply knowledge to a situation, not just recognize a definition. Questions with plausible wrong answers that someone who didn't really understand might choose.

The Baseline Test: Take your assessment and give it to someone who hasn't taken the training. If they can pass on general knowledge alone, the assessment isn't measuring what the training taught.
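If you run the baseline test with more than one person, the comparison comes down to two pass rates. Below is a minimal sketch, assuming scores on a 0 to 1 scale. The function names, the 0.8 pass mark, and the 0.3 ceiling for how often untrained people may pass are all hypothetical choices for illustration, not a standard.

```python
def pass_rate(scores: list[float], pass_mark: float) -> float:
    """Fraction of scores at or above the pass mark."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(s >= pass_mark for s in scores) / len(scores)


def baseline_check(trained: list[float], untrained: list[float],
                   pass_mark: float = 0.8, max_baseline_pass: float = 0.3):
    """Compare trained learners with people who never took the training.

    Returns (trained_rate, untrained_rate, assessment_is_suspect).
    The 0.8 pass mark and 0.3 ceiling are illustrative assumptions.
    """
    trained_rate = pass_rate(trained, pass_mark)
    untrained_rate = pass_rate(untrained, pass_mark)
    # If too many untrained people pass, the quiz is testing general knowledge.
    suspect = untrained_rate > max_baseline_pass
    return trained_rate, untrained_rate, suspect


print(baseline_check([0.9, 0.85, 0.95, 0.7], [0.85, 0.9, 0.6, 0.82]))
# (0.75, 0.75, True): untrained people pass as often as trained ones
```

When both groups pass at similar rates, the assessment is the thing to fix before drawing conclusions about the training.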

Measuring Retention Over Time

Immediate post-training assessment has its own limitations. It measures what people know right after training, when everything is fresh. It doesn't tell you whether they'll remember it next week, next month, or when they actually need it.

Retention over time is a different question—and often a more important one.

Spaced assessments can answer it. A follow-up quiz a week later. Another one a month later. The gap between immediate performance and delayed performance tells you how much is sticking. If scores drop dramatically after a week, the training isn't producing durable learning.
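One simple way to express "how much is sticking" is the ratio of each follow-up average to the immediate post-training average. The sketch below assumes scores on a 0 to 100 scale and treats a ratio under 0.7 as a dramatic drop. That threshold and the function name are hypothetical, chosen only to illustrate the comparison.

```python
from statistics import mean


def retention_report(immediate: list[float],
                     follow_ups: dict[str, list[float]],
                     min_ratio: float = 0.7) -> dict[str, tuple[float, bool]]:
    """Compare delayed scores against immediate post-training scores.

    immediate:  scores from the end-of-training assessment.
    follow_ups: maps a label such as "1 week" to delayed scores.
    min_ratio:  hypothetical cutoff below which the drop counts as dramatic.
    Returns {label: (retention_ratio, dropped_dramatically)}.
    """
    baseline = mean(immediate)
    if baseline <= 0:
        raise ValueError("immediate scores must have a positive average")
    report = {}
    for label, scores in follow_ups.items():
        ratio = mean(scores) / baseline  # share of immediate performance kept
        report[label] = (round(ratio, 2), ratio < min_ratio)
    return report


print(retention_report([90, 85, 95], {"1 week": [72, 70, 74],
                                      "1 month": [50, 55, 59]}))
# {'1 week': (0.8, False), '1 month': (0.61, True)}
```

Comparing averages across the same group of learners keeps the example simple; tracking each learner's own drop would give a finer picture.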

Testing Application

Knowledge and retention matter, but the real question is behavior. Are people actually doing anything differently because of the training?

This is where measurement gets genuinely hard. Behavior happens out in the world, not in the LMS.

Sometimes you can observe directly. A manager who attended leadership training—are they running one-on-ones differently? A sales team that learned a new methodology—are they using it in calls? A customer service team trained on de-escalation—are their conversations going differently?

Observation is high-effort but high-validity. You're seeing what people actually do, not what they say they'll do or what they can recognize on a quiz.

Beyond Quizzes: Other Verification Approaches

Sometimes you can use proxies. Survey the people around the learner: Has your manager's communication changed? Is the sales team following the new process? Do colleagues see different behavior?

Sometimes behavior shows up in systems. Sales methodologies might be visible in CRM notes. Support interactions get recorded. Process adherence leaves traces. If you can connect training to behavioral data that already exists, you can measure without creating new overhead.

The most ambitious measurement connects training to business outcomes. "Support ticket resolution time improved 15% after the training" speaks louder than "95% completed."
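A figure like "improved 15%" is a relative change between two periods. Here is a minimal sketch, assuming you can export per-ticket resolution times in hours for comparable periods before and after the training. The function name and the use of the median (so a few very long tickets don't dominate) are assumptions. A before/after comparison on its own also can't rule out other causes, such as a new tool rolled out at the same time.

```python
from statistics import median


def percent_improvement(before: list[float], after: list[float]) -> float:
    """Percent reduction in median resolution time after training.

    Positive values mean tickets are resolved faster than before.
    """
    b, a = median(before), median(after)
    if b <= 0:
        raise ValueError("baseline median must be positive")
    return round((b - a) / b * 100, 1)


print(percent_improvement([10, 12, 20, 8, 14], [9, 10, 11, 8, 30]))
# 16.7: median fell from 12 to 10 hours
```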

Match the Measure to the Training

Different types of training warrant different measurement approaches.

- Compliance: The question is often whether people can apply policies correctly. Use scenario-based assessments that test judgment.

- Skill-building: You want to see the skill demonstrated. Can they actually do the thing? Use role-play assessments and work product reviews.

- Knowledge: Retention matters most. Do they remember the information when they need it? Use spaced assessments over time.

- Onboarding: Time to productivity is the natural outcome metric. How quickly do new hires reach full effectiveness?

The Feedback Loop

Whatever you measure, the point is to create a feedback loop. Measurement without action is just data collection.

If assessments show that certain concepts aren't being learned, improve how you teach those concepts. If retention drops dramatically after a week, add spaced reinforcement. If behavior isn't changing, figure out what's blocking application—maybe it's the training, maybe it's the environment, or maybe it's incentives that conflict with what you trained. Consider whether different training formats might produce better results.

The best L&D teams treat measurement as a diagnostic tool. Not just proof that they did their job, but information that helps them do it better.

Completion rates aren't useless. They tell you whether people showed up, which is a prerequisite for everything else. But completion is the floor, not the ceiling. The real questions—did they learn, did they retain, did they change behavior, did results improve—require more effort to answer.

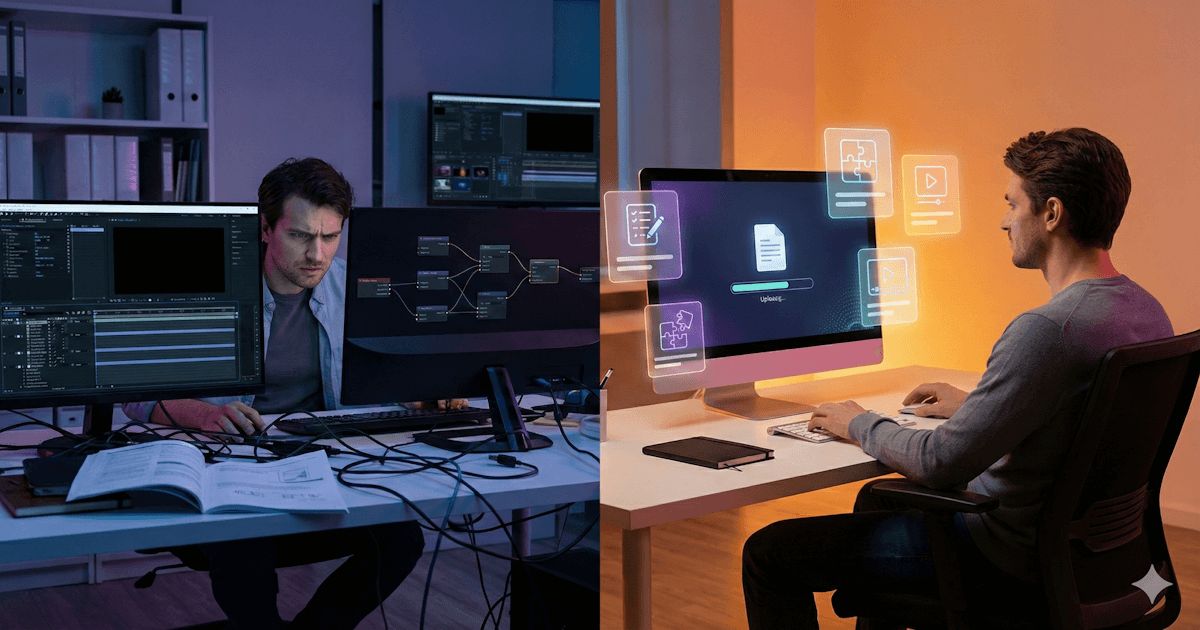

JoySuite builds measurement in. Assessments that test real understanding. Analytics that show what people know and where they struggle. AI-powered workflow tools help create assessments that reveal genuine comprehension. Data that tells you whether training is working—not just whether people clicked through it.