Key Takeaways

- The L&D market is saturated with AI promises, but not all capabilities are created equal

- Real and working today: grounded AI for instant answers, rapid content drafting, and scalable roleplay

- Overpromised: "magic" ROI measurement, fully autonomous instructional design, and personalization that's just filtering

- Implementation quality matters more than whether a vendor "has AI"—ask how exactly their AI works

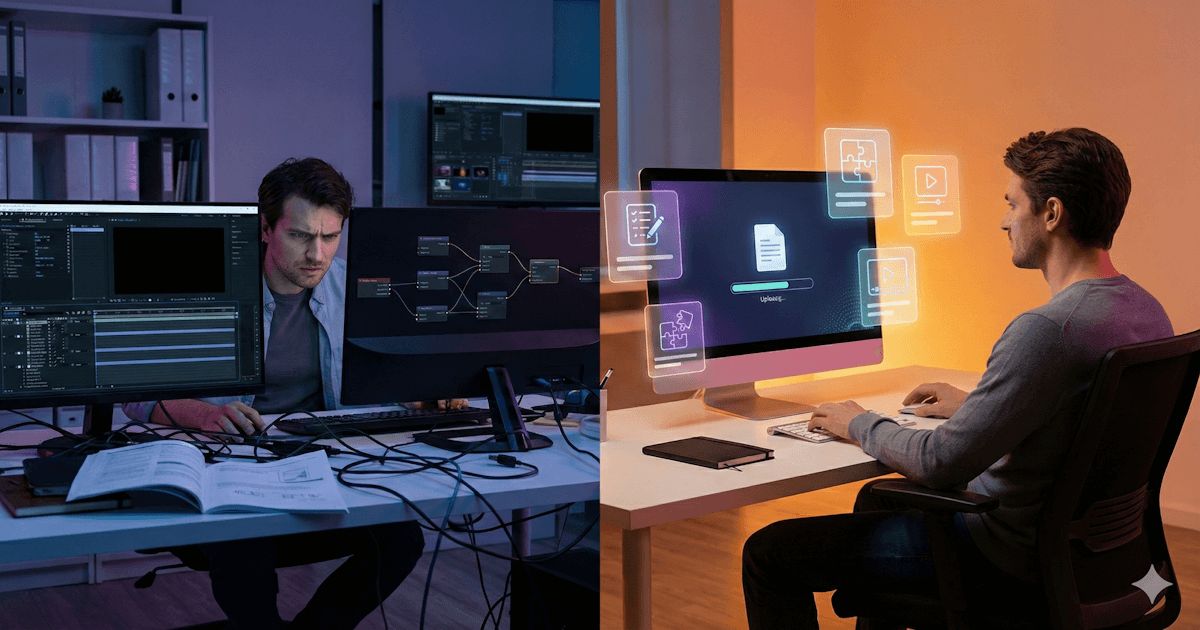

Every vendor in the learning space is talking about AI now. Every product has AI features. Every pitch deck promises transformation.

The word has become so ubiquitous that it's starting to mean nothing—a marketing term rather than a description of actual capability.

This makes it hard to know what to take seriously. Some of what's being promised is genuinely transformative. Some is incremental improvement dressed up in revolutionary language. Some is vaporware that doesn't actually work yet.

If you're trying to make decisions about where to invest—your budget, your time, your organization's learning infrastructure—you need to separate what's real from what's hype.

Real: AI Can Answer Questions From Your Content

This works today, and it works well. Upload your documentation, policies, product information, and training materials. AI can read it, understand it, and answer questions about it in natural language.

Someone asks, "What's our policy on returning items without a receipt?" The AI finds the relevant information, synthesizes an answer, and provides it in seconds. No searching through documents. No hoping you used the right keywords. Just an answer.

This is genuinely useful. It solves a real problem—the friction of finding information in organizational knowledge—in a way that wasn't possible before. The technology is mature enough to deploy today.

The caveat: quality depends entirely on the quality of your underlying content. AI can't give good answers if your documentation is wrong, outdated, or doesn't exist. It surfaces what you have; it doesn't create knowledge you don't have.
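To make "grounded" concrete, here is a minimal sketch of the retrieval step. It is a toy stand-in, not how any particular product works: real systems typically use semantic search plus a language model, while this uses simple word overlap. The `DOCS` contents, the `retrieve` function, and the `min_overlap` threshold are all hypothetical, chosen only for illustration.

```python
import re

# Hypothetical knowledge base; in practice this would be your uploaded documents.
DOCS = {
    "returns-policy": "Items returned without a receipt are eligible for store credit at the lowest recent sale price.",
    "shipping-policy": "Standard shipping takes three to five business days within the country.",
}

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "on", "of", "for", "to", "in",
             "at", "our", "what", "what's", "without", "with"}


def tokens(text):
    """Return the set of lowercase words in text, minus stopwords."""
    return {w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS}


def retrieve(question, docs, min_overlap=2):
    """Return (doc_id, passage) for the best-matching passage.

    Returns None when no passage shares at least min_overlap words with
    the question, so the caller can say "I don't know" instead of guessing.
    """
    q = tokens(question)
    best_id, best_score = None, 0
    for doc_id, passage in docs.items():
        score = len(q & tokens(passage))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_score < min_overlap:
        return None
    return best_id, docs[best_id]


print(retrieve("What's our policy on returning items without a receipt?", DOCS))
print(retrieve("What is the parental leave policy?", DOCS))
```

The second question comes back empty because nothing in the sample content covers parental leave. That is the caveat above in miniature: a grounded system can only surface what the documentation already contains.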

Real: AI Can Generate Draft Content Quickly

Need quiz questions from a document? AI can generate them in seconds. Need a summary of a long policy? Done. Need a first draft of a training script based on existing materials? Possible.

This compresses the work of content creation. Tasks that used to take hours can take minutes. Platforms like JoySuite offer content authoring tools that accelerate this process. The L&D team that used to be bottlenecked on production capacity can suddenly produce much more. Understanding how AI training content creation works helps you set realistic expectations for what it can deliver.

The Review Requirement: The important word is "draft." AI-generated content needs human review. It makes mistakes. It sometimes misses nuance. It can hallucinate details that aren't in the source. The workflow is "AI drafts, human reviews and refines, then publishes."

Real: AI Can Enable Practice at Scale

Roleplay and scenario practice used to require human partners. AI can now play the other role—the difficult customer, the resistant prospect, the employee receiving feedback. This is enabled through workflow assistants that simulate conversations.

People can practice conversations whenever they want, as many times as they want.

This doesn't perfectly replicate practicing with a skilled human coach. The AI doesn't have the judgment and experience that a great facilitator brings. But it's dramatically better than no practice at all, which is what most people were getting before.

The rep who's practiced an objection response twenty times with AI is more prepared than the one who practiced twice with a colleague. (Illustrative example)

Hype: AI Will Replace Instructional Designers

This gets said often, usually by people who don't understand what instructional designers actually do.

AI can generate content. It can't determine what learning experiences an organization needs. It can't diagnose performance problems and figure out whether training is the right solution. It can't navigate stakeholders, understand organizational context, or make judgment calls about what will actually work.

The production work—writing, building, creating assets—is getting faster and easier. The design work—figuring out what to build and why—still requires human judgment. Knowing when to use AI tools versus traditional authoring is part of that judgment.

Depends on Implementation: AI-Powered Personalization

The pitch sounds great: AI analyzes each learner's needs, adapts content in real time, and creates a perfectly personalized learning path.

When done poorly, this is pure hype. "Personalization" that amounts to recommending courses based on job title isn't transformative—it's a filter. Systems that claim to adapt but really just branch based on a single quiz score aren't delivering on the promise.

When done well, personalization is real and valuable. AI that understands someone's role and surfaces relevant content for their actual work. An assessment that identifies specific knowledge gaps and addresses them. Learning that adapts based on demonstrated understanding, not just stated preferences.

The difference is whether personalization is a marketing checkbox or a genuine capability. Ask vendors to show you exactly how their personalization works.
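One way to picture the difference is to compare a job-title filter with a recommendation driven by demonstrated knowledge. The sketch below is illustrative only: the `CATALOG` entries, the skill scores, and the 0.8 passing threshold are all assumptions, and a real system would draw on much richer signals.

```python
# Hypothetical course catalog; titles, roles, and skills are illustrative.
CATALOG = [
    {"title": "Handling Price Objections", "role": "sales", "skill": "objections"},
    {"title": "Discovery Call Basics", "role": "sales", "skill": "discovery"},
    {"title": "Refund Policy Walkthrough", "role": "support", "skill": "refunds"},
]


def recommend_by_title(role, catalog):
    """The 'filter' version: everyone with the same role gets the same list."""
    return [c["title"] for c in catalog if c["role"] == role]


def recommend_by_gaps(role, skill_scores, catalog, passing=0.8):
    """Gap-based version: keep only content for skills not yet demonstrated.

    skill_scores maps a skill name to an assessment score between 0 and 1;
    a skill with no score is treated as a gap.
    """
    return [
        c["title"]
        for c in catalog
        if c["role"] == role and skill_scores.get(c["skill"], 0.0) < passing
    ]


# A sales rep who has already shown mastery of objections but not discovery.
scores = {"objections": 0.9, "discovery": 0.5}
print(recommend_by_title("sales", CATALOG))
print(recommend_by_gaps("sales", scores, CATALOG))
```

The first function returns the same two courses for every sales rep. The second skips what this learner has already demonstrated and returns only the discovery course, which is the kind of behavior worth asking a vendor to show you.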

Depends on Implementation: Measuring Learning Effectiveness

Measuring whether training actually improved performance is hard. It requires connecting training data to business outcomes, controlling for other variables, and making causal claims in complex environments.

Some vendors suggest AI magically solves this. It will track what people learned, connect it to their job performance, and tell you the ROI of your training investment.

If the promise is fully automated ROI measurement with no effort on your part, that's hype.

But AI genuinely can improve measurement when implemented thoughtfully. Assessments that verify actual understanding—not just completion—give you real data about what people know. Analytics that show where learners struggle reveal what's working and what isn't.

Humility in Causation: The key is being realistic about what you're measuring. AI can tell you whether people learned what you taught them. Connecting that to business outcomes still requires thought, integration, and appropriate humility about causation.

Depends on Implementation: AI as Tutor

The dream is an AI that acts as a personal tutor—explaining concepts, answering questions, guiding someone through material the way a skilled human teacher would. Always available, infinitely patient, adapting to each learner.

Overpromised versions of this don't deliver. Chatbots that give generic responses. Systems that can answer simple questions but fall apart on anything complex. "Tutoring" that's really just a search interface with a conversational wrapper.

But AI tutoring that's grounded in your actual content works remarkably well. When someone can ask a question about your product, your policy, your process—and get an accurate, contextual answer drawn from your knowledge base—that's real tutoring.

The Honest Summary

AI is genuinely useful for L&D today. It's changing what's possible for knowledge access, content creation, and practice at scale. These are real capabilities you can deploy now. For a comprehensive look at what's working and how to implement it, see AI for Learning and Development: The Complete Guide.

But there's a wide gap between AI done well and AI done poorly. The same capability—personalization, measurement, tutoring—can be transformative or worthless depending on implementation.

The organizations getting value from AI in L&D are the ones asking hard questions. Not "do you have AI?" but "how exactly does your AI work, and what evidence do you have that it delivers results?"

JoySuite uses AI for what it's good at—and implements it well. Answering questions from your knowledge, grounded in your actual content. Assessments that verify real understanding. Practice at scale. Personalization based on role and demonstrated knowledge.